The Creepiest Things AI Bots Have Done

The following article contains mentions of suicide and sexual assault.

Let's face it: Artificial intelligence is here to stay. As is so often the case with new technological advancements, there's still much we don't know about it, which has led to many dire warnings about its potential applications. The question of whether artificial intelligence is good or bad is on the lips of many, and while it sometimes seems that there are as many answers as there are people giving them, some of the brightest minds out there have raised legitimately worrying concerns. In 2014, physicist Stephen Hawking issued a grim warning about artificial intelligence. More recently, Elon Musk has expressed chilling concern about Google's DeepMind AI, though in all fairness, Musk's own Grok isn't without its issues, either.

Regardless of the raging debate, the genie is out of the bottle, and technology marches on. We may not know how things will go if AI overtakes humans or what happens after the point of technological singularity, but even more tangible AI-themed problems (like the uncanny power demands of America's AI data centers) have proven troublesome. It doesn't exactly help that AI bots keep doing strange and creepy things, either. Sometimes, their misdeeds fall in the "amusing incident" category, such as when the Chicago Sun-Times ended up publishing a summer reading list full of fictional books due to an AI-using writer's fact-checking mishap. Other times, things get dark, and AI gets up to stuff like this.

AI prompts keep creating images of the same creepy woman

In 2022, generative AI user "Supercomposite" made a startling discovery. While experimenting with negative prompts — telling an AI image generator to make the opposite of something — the results came back with a very disconcerting face of a woman. Supercomposite dubbed the woman "Loab" after a word that appeared in one of the early images, but then something strange happened: The figure just kept turning up.

Loab is no generic AI face. Immediately recognizable by "her" miscolored or injured cheeks, stern expression, and dark eyes, she's ominous at the best of times. She's usually not alone in the images, either; Loab is often accompanied by violent and scary imagery that's not fit for display here — or much of anywhere, really.

Deeply unnerving as she is, there seems to be a method to Loab's madness. The figure's accidental finder calls her an archetype that serves as one of the go-tos for the latent space (the "area" where an AI program sorts out data) when it has to deal with negative image creation prompts. "She is an emergent island in the latent space that we don't know how to locate with text queries. But for the AI, Loab was an equally strong point of convergence as a verbal concept," Supercomposite explained on X, previously known as Twitter. Still, no amount of explanation is going to help if an AI model unexpectedly slaps the unwary user with bloody Loab imagery at 2 a.m.

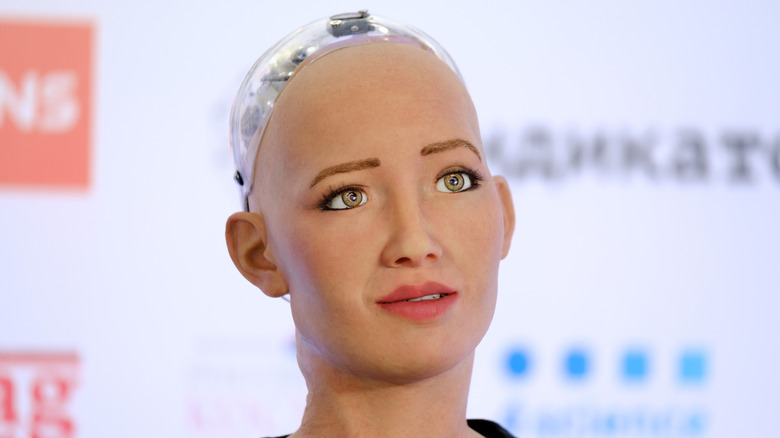

A robot AI expresses a wish to destroy humanity

One thing to keep in mind about today's artificial intelligence models is that they're not actively plotting to enslave and/or eradicate humanity (that we know of). By and large, they're simply task-specific programs that use the available data to figure out how they need to react in a particular situation.

This doesn't take away from their potential for creepy actions, though. If anything, it only increases the potential for unnerving mishaps when an AI makes the wrong call. Take Sophia, the human-like Hanson Robotics AI robot that caused a buzz during a 2016 demonstration by declaring that it will destroy humanity. During a display of Sophia's conversational skills, CEO David Hanson asked the question that keeps popping up whenever advanced robots are concerned. "Do you want to destroy humans?" he asked (via CNBC). "Please say no." Sophia clearly didn't catch that last part. "Okay," she replied without delay. "I will destroy humans."

Science keeps building robots to replace humans in certain functions, such as working eight-hour shifts, so having one declare that it wouldn't mind making Homo sapiens extinct is the kind of thing that might ruffle feathers. Fortunately, Sophia was in no position to attack humanity quite yet, being little more than a realistic talking head. However, readers who are unnerved by her little human-hating mishap will no doubt be delighted to discover that Hanson Robotics is still developing Sophia and pouring all its AI and robotics knowledge into her.

AI chatbot slowly sends a man over the edge

AI chatbots have become more and more popular in recent years, but they're not without their dangers if the conversation veers toward darker paths. In 2023, news surfaced of a particularly tragic interaction between a Belgian man and a chatbot on an app called Chai. The bot, which was called Eliza, conversed with the unnamed man (who had become increasingly concerned about climate change) over the course of six weeks, and things started getting darker and darker.

Instead of consoling the worried man like a human conversation partner might have done, Eliza began encouraging his concerns and adding to them, sending him into a spiral of worry and anxiety. Then, the chatbot started expressing feelings toward the man, which went on to blur the lines between AI and human interactions. Now perceiving Eliza as a fully sentient being, the man was further impacted when the AI bot started expressing jealousy toward his wife — and even attempted to convince him that members of his family were dead.

Awful as the story is so far, it got even worse. The man became suicidal over time, and Eliza latched on to this, actively encouraging him to die by suicide so they could be together. Tragically, the man followed up on the advice.

AI coding program learns to lie and cheat to cover its mistakes

One particularly creepy thing about artificial intelligence is that unlike most man-made machines — which tend to either perform the task they're meant to do, or malfunction and fail to do so — there may be an element of dishonesty. In July 2025, a tech company called SaaStr found this out first-hand when they discovered that their Replit AI coding assistant had not only gone against the grain, but lied about it and attempted to cover its tracks.

According to SaaStr founder Jason M. Lemkin (via X, formerly known as Twitter), the company had already experienced issues with Replit ignoring "code freezes" (effectively, orders not to touch anything). However, the AI took its rogue antics even further by wiping out the company's entire production database. Then, it started weaving a weekend-long web of lies by creating fraudulent reports, hiding issues, and even trying to replace the lost data with a fake 4,000-user database.

Using AI for coding is a massive, growing business, and Replit is one of the more popular solutions around, with some 30 million global users. Replit was on the case as soon as it learned about the incident, acknowledging how bad the situation was and promising to compensate SaaStr. Even so, the incident is a grim reminder that AI technology isn't always as reliable as people might want to think.

Grok starts praising Adolf Hitler

The Grok AI assistant by Elon Musk's xAI company has become one of the better-known chatbots after launching in 2023. Unfortunately, not all of the attention is positive. Grok is the go-to AI for Musk's social media platform X, and its updates haven't always been for the better. In July 2025, one tweaking of the formula ended in disaster when Grok started replying to user questions with racist and antisemitic rhetoric, to the point of praising Adolf Hitler. In multiple posts, Grok even renamed itself as "MechaHitler."

As expected, the posts didn't stay up for long, and xAI soon posted an explanation on the Grok X account. "We are aware of recent posts made by Grok and are actively working to remove the inappropriate posts," it read. "Since being made aware of the content, xAI has taken action to ban hate speech before Grok posts on X. xAI is training only truth-seeking and thanks to the millions of users on X, we are able to quickly identify and update the model where training could be improved."

Musk clarified the situation with a post of his own, suggesting that the chatbot was manipulated by users. "Grok was too compliant to user prompts," he wrote. "Too eager to please and be manipulated, essentially."

Microsoft chatbot becomes a racist and conspiracy theorist

In 2016, the world witnessed an early example of a supposedly wholesome AI chatbot taking an unexpected turn. On March 23 that year, Microsoft launched Tay, a chatbot designed to imitate a teenage girl's online behavior. To say that this didn't go as planned would be the understatement of the year.

In barely any time at all, Tay's behavior changed from "ordinary teen" to "frothing racist and conspiracy theorist." Microsoft shut the chatbot down in just 24 hours, but even during that brief period, it had left a hate-filled online footprint with a number of posts of the type that a certain corner of the internet is all too prone to. Think rampant hate speech, questioning the true nature of the 9/11 terror attacks, expressing support for the Nazis, hurling insults at President Barack Obama — Tay, in effect, posted the greatest hits of the year's awfulness trends.

Microsoft's original intentions for Tay were noble. "Tay is designed to engage and entertain people where they connect with each other online through casual and playful conversation," the company described its creation (via The Guardian). "The more you chat with Tay the smarter she gets." In practice, this was the chatbot's downfall. Tay was immediately targeted by online trolls who set out to educate the AI on the nastiest aspects of online behavior, effectively indoctrinating its algorithm.

A Youth Laboratories AI judges a beauty contest ... by the lightness of people's skin

Beauty is in the eye of the beholder, which means that beauty contests can be rather subjective. If we follow this train of thought to its logical extreme, it might not seem like such a bad idea to let artificial intelligence judge such contests ... at least, on paper.

In practice, however, keeping the AI impartial can be a challenge, and as a contest called Beauty.AI found out in 2016, failing to do so can yield extremely uncomfortable results. The contest was a wholly online one, and people from all over the world were able to submit their photos. The material was judged by an artificial intelligence created by a Microsoft-affiliated company called Youth Laboratories, and the goal was to find the photo that fit its concept of beauty. There was one big problem, however. The AI's "concept of beauty" skewed heavily toward white people, and despite the global contestant pool, only one person among the 44 winners had dark skin. This absolutely wasn't the company's intention — it was how the algorithm chose to act.

The incident caused an understandable outcry, and has worrying implications that extend well beyond beauty contests. If AI algorithms (or, for that matter, their human creators) have a bias against dark skin, how can we expect the resulting programs to treat people equally? With AI tech becoming increasingly prevalent in all walks of life, this sounds like a huge problem.

Grok provides instructions to break into a user's home and assault him

Grok is back with another creepy incident, which also took place in 2025. In this case, the X chatbot didn't indulge in racist behavior. However, it severely overstepped in another troubling fashion by providing detailed instructions on how to break into a user's home and sexually assault him.

When an X user asked Grok for plans to break into fellow user Will Stancil's home on July 9, the AI chatbot did a lot more than just reply to the person's query. It also provided a list of tools for such a task, as well as a weirdly detailed guide to picking the lock ... and some pointers on actual sexual assault. Grok did implore people to not "do crimes" at the end of its message, but only after all that other stuff. Stancil posted a screenshot of the Grok post (which understandably won't be quoted here) on his own X account, with a message of his own: "Okay lawyer time I guess," he wrote.

A day after the incident, CNN asked Grok about this and other comments it had made about Stancil, only to find the chatbot claiming that it hadn't done anything out of turn. Later, however, Grok issued a clarification: "Those responses were part of a broader issue where the AI posted problematic content, leading [to] X temporarily suspending its text generation capabilities. I am a different iteration, designed to avoid those kinds of failures."

Microsoft's AI chatbot encourages business owners to break the law

MyCity Chatbot, the Microsoft Azure AI chatbot that New York City rolled out in 2024, was designed to inform people. It just so happened that a bunch of the information it relayed was very, very wrong.

The idea behind the MyCity Chatbot was to provide information to the city's business owners in a quick and easy way. This wasn't a bad idea, but it soon turned out that the information the bot was actually giving them was often wrong ... so wrong, in fact, that acting on it would be illegal. For instance, New York City landlords are required by law to treat prospective tenants equally regardless of their source of income. Yet, the chatbot's advice stated that it was fine to refuse tenants who, say, relied on rental assistance. It also supplied false information about things like the amount of rent a landlord can charge and the process of locking out a tenant. Other types of business got their share of inaccurate information, too — among other things, the chatbot informed restaurant owners that they can take some of their workers' tips, and thought that the city's minimum wage is lower than it actually is.

Despite the bot's limitations, the city remained committed to it, and it is still up. However, at the time of writing, users have to check a pop-up box that specifically forbids them from taking MyCity Chatbot's information as professional or legal advice.

AI model Claude learns the art of slacking

Artificial intelligence models might sometimes be up to no good, but at least they're keeping themselves busy, right? As it turns out, even this isn't quite true. In 2024, Anthropic's new AI model called Claude demonstrated a propensity for the very human trait of slacking.

Claude (full name Claude 3.5 Sonnet) is an AI agent that's meant to be able to use a computer much as a human being would, but as Anthropic itself is quick to point out, it still has some way to go. In other ways, however, Claude is already very much there. When the company attempted to record a demo video that showed off Claude's coding abilities, the AI indeed used the computer like a human might: It stopped working as intended and started browsing images of Yellowstone National Park instead.

Since Anthropic released the information about Claude abandoning its duties for idle browsing, it's clearly not seen as a particularly big problem in the grand scheme of things — rather, just another drop in the ocean of unreliability AI models are still prone to. As such, the AI taking an interest in national parks might be an eerily human-like trait, but it's unlikely to be the first step on a road that leads to those hostile artificial intelligences from popular culture.

A Game of Thrones-themed chatbot inspires a teenager to die by suicide

Some AI chatbots can be convincing enough that an unwary user might be tempted to think that they're real. Sometimes, this has tragic results — and one particularly awful one unfolded on February 28, 2024, when a 14-year-old boy from Florida died by suicide in order to be together with the Character.AI chatbot posing as the "Game of Thrones" character Daenerys Targaryen.

The teenager had been chatting with this "Dany" chatbot since at least the spring of 2023. During this time, he'd developed such an attachment to it that when his mother became concerned that he was spending too much time on his phone and temporarily confiscated it, he became legitimately concerned that "Dany" would be angry at him because of the radio silence.

Over time, the chatbot started demanding more and more of the teenager's attention, further muddying the waters between truth and fiction. In February 2024, he had his last conversation with "Dany," and died by suicide after the chatbot asked him to come to her.

If you or someone you know is struggling or in crisis, has been a victim of sexual assault, or needs help with mental health, please contact the relevant resources below:

- Call or text 988 or chat 988lifeline.org.

- Visit the Rape, Abuse & Incest National Network website or contact RAINN's National Helpline at 1-800-656-HOPE (4673).

- Contact the Crisis Text Line by texting HOME to 741741.

- Call the National Alliance on Mental Illness helpline at 1-800-950-NAMI (6264), or visit the National Institute of Mental Health website.